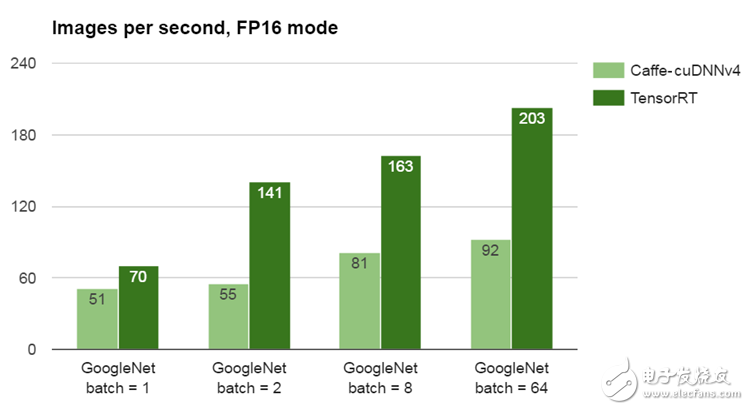

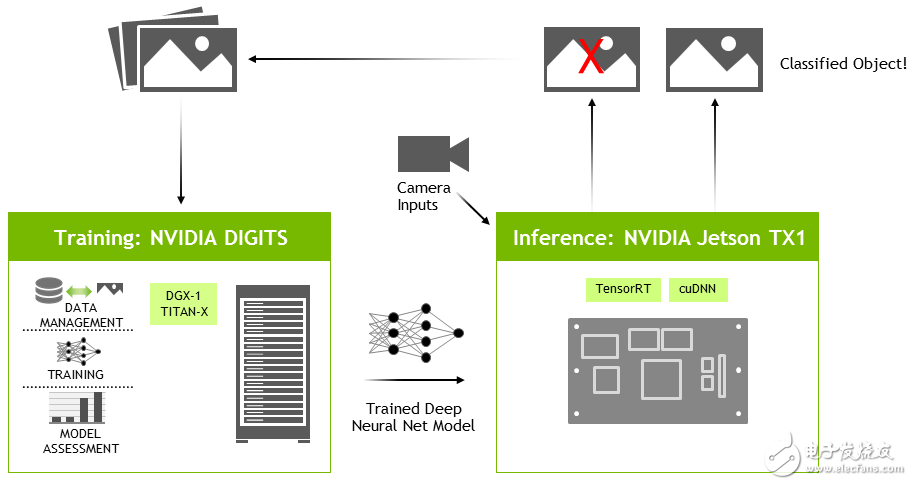

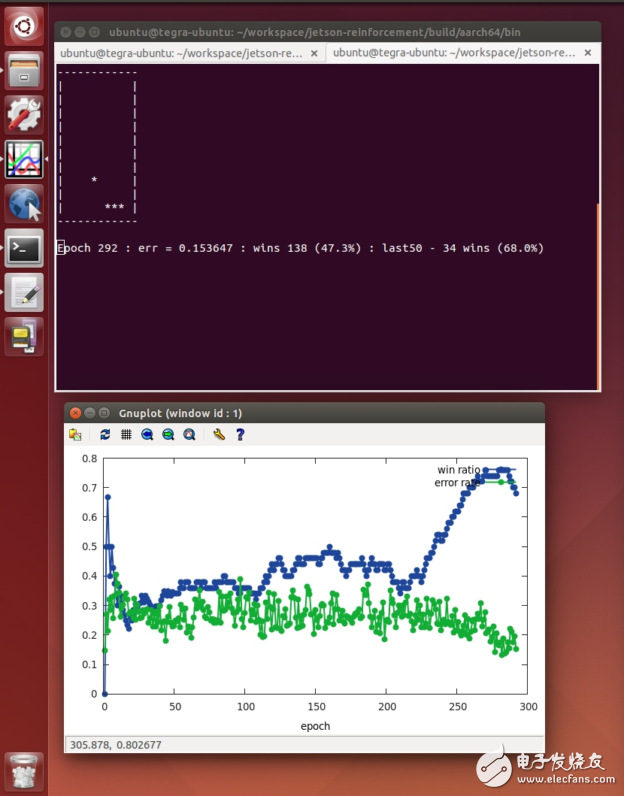

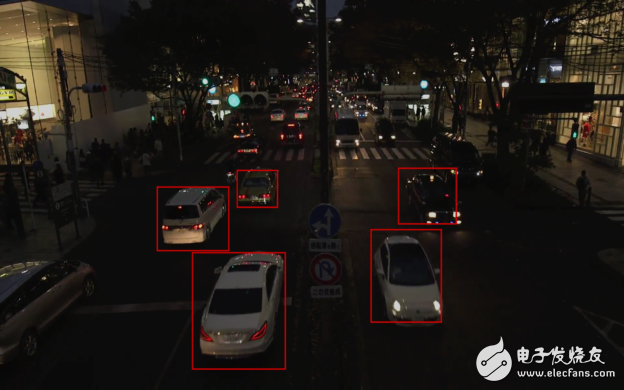

Deep neural networks (DNNs) are a powerful way to implement computer vision and artificial intelligence applications. NVIDIA JetPack 2.3, released today, uses NVIDIA TensorRT (formerly known as the GPU Inference Engine, or GIE) to more than double the run-time performance of DNNs in embedded applications. NVIDIA's 1 TFLOP/s embedded Jetson TX1 module can be deployed on board drones and smart machines, with up to 20 times better power efficiency than an Intel i7 CPU on inference workloads. Jetson and deep learning power the latest advances in autonomy and data analytics, such as the ultra-high-performance Teal drone shown in Figure 1.

JetPack includes a comprehensive set of tools and SDKs that simplify deploying core software components and deep learning frameworks on host and embedded target platforms. JetPack 2.3 adds new APIs for efficient low-level camera and multimedia streaming on the Jetson TX1, along with updates to Linux for Tegra (L4T) R24.2, with Ubuntu 16.04 aarch64 and Linux kernel 3.10.96. JetPack 2.3 also includes CUDA Toolkit 8.0 and cuDNN 5.1, which provide GPU-accelerated support for convolutional neural networks (CNNs) and advanced networks such as RNNs and LSTMs. To stream data efficiently into and out of the algorithm pipeline, JetPack 2.3 adds a new Jetson Multimedia API package that supports low-level hardware V4L2 codecs and a per-frame camera/ISP API based on Khronos OpenKCam.

The tools included in JetPack lay the foundation for deploying real-time deep learning applications and beyond. See the complete software list below. To get you started, JetPack also includes deep learning examples and end-to-end tutorials on training and deploying DNNs.

Now available for Linux and 64-bit ARM through JetPack 2.3, NVIDIA TensorRT maximizes the run-time performance of neural networks deployed on the Jetson TX1 or in the cloud.
After you provide a neural network prototxt and trained model weights through an accessible C++ interface, TensorRT performs pipeline optimizations, including kernel fusion, layer autotuning, and half-precision (FP16) tensor layout, to improve performance and system efficiency. For the concepts behind TensorRT and its graph optimizations, see this Parallel Forall article.

The benchmark results in Figure 2 compare the inference performance of the GoogleNet image recognition network between GPU-accelerated Caffe and TensorRT. Both use FP16 extensions and are compared across a range of batch sizes. (Compared with FP32, FP16 mode does not cause any loss of classification accuracy.) The performance measure is the number of images per second processed with GoogleNet, using either TensorRT or Caffe's optimized nvcaffe/fp16 branch. The test uses planar BGR 224×224 images, with the GPU core clock frequency governor set to its maximum of 998 MHz. The batch size indicates how many images the network processes at one time.

The results in Figure 2 show that TensorRT improves inference performance over Caffe by more than 2 times at a batch size of 2, and by more than 30% for single images. Although batch size 1 provides the lowest instantaneous latency on a single data stream, applications that process multiple data streams or sensors at the same time, or that perform windowed or region-of-interest (ROI) subsampling, can take advantage of batch size 2. Applications that can support larger batch sizes (for example, 8, 64, or 128), such as data center analytics, achieve even higher overall throughput.

Comparing power consumption shows another advantage of GPU acceleration. As shown in Figure 3, the Jetson TX1 with TensorRT is 18 times more efficient at deep learning inference than an Intel i7-6700K Skylake CPU running Caffe with MKL 2017. The results in Figure 3 are determined by dividing the measured GoogleNet images per second by the power consumption of the processor during the benchmark. These results use a batch size of 2, although with a batch size of 64 the Jetson TX1 can reach 21.5 GoogleNet images/sec/watt. Starting from the network layer specification (prototxt file), TensorRT performs optimizations at the network layer level and above; for example, it fuses kernels and processes more layers at a time, saving system resources and memory bandwidth.

By connecting TensorRT to cameras and other sensors, deep learning networks can be evaluated on live data in real time. This is useful for implementing navigation, motion control, and other autonomous functions. Deep learning greatly reduces the amount of hard-coded software required to implement complex intelligent machines. See this GitHub repo for a tutorial on using TensorRT to quickly identify objects with the Jetson TX1 onboard camera and to locate the coordinates of pedestrians in a video feed.

In addition to fast evaluation of neural networks, TensorRT works effectively alongside NVIDIA's DIGITS workflow for interactive GPU-accelerated network training (see Figure 4). DIGITS can run in the cloud or locally on the desktop, and provides easy configuration and interactive network training using Caffe or Torch. There are multiple DIGITS walkthrough examples that you can use to start training a network with your own data set. DIGITS saves a model snapshot at each training epoch (one pass through the training data). The desired model snapshot, or .caffemodel, is copied to the Jetson TX1 along with the network prototxt specification. TensorRT then loads and parses the network files and builds an optimized execution plan.
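As a small aside on the efficiency metric behind Figure 3 (images per second divided by processor power), the arithmetic can be sketched as follows. The function names and the sample numbers are illustrative assumptions, not measurements from the benchmark.

```python
def images_per_sec_per_watt(images_per_sec: float, watts: float) -> float:
    """Throughput normalized by power draw, in images/sec/watt."""
    if watts <= 0:
        raise ValueError("power draw must be positive")
    return images_per_sec / watts


def efficiency_ratio(device_a: float, device_b: float) -> float:
    """How many times more efficient device A is than device B."""
    return device_a / device_b


# Hypothetical placeholder measurements, chosen only to make the math easy
# to follow. They are NOT the values measured for Figure 3.
embedded = images_per_sec_per_watt(images_per_sec=200.0, watts=10.0)
desktop = images_per_sec_per_watt(images_per_sec=100.0, watts=80.0)

print(f"embedded: {embedded:.2f} images/sec/watt")
print(f"desktop:  {desktop:.2f} images/sec/watt")
print(f"ratio:    {efficiency_ratio(embedded, desktop):.1f}x")
```

Normalizing by power rather than comparing raw throughput is what lets a low-power embedded module be compared fairly against a desktop CPU that draws far more power.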
With DIGITS and DGX-1 supercomputers for training and TensorRT on Jetson for deployment, NVIDIA's complete computing platform enables developers everywhere to deploy advanced artificial intelligence and scientific research with end-to-end deep learning solutions.

CUDA Toolkit 8.0 contains the latest updates to CUDA for the Jetson TX1's integrated NVIDIA GPU. Host compiler support has been updated to include GCC 5.x, and the NVCC CUDA compiler has been optimized to compile twice as fast. CUDA 8 includes nvGRAPH, a new library of GPU-accelerated graph algorithms such as PageRank and single-source shortest path. CUDA 8 also includes new APIs for using half-precision floating-point (FP16) computation in CUDA kernels and in libraries such as cuBLAS and cuFFT.

cuDNN, the CUDA Deep Neural Network library, supports in version 5.1 the latest advanced network models such as LSTM (long short-term memory) and RNN (recurrent neural network). See this Parallel Forall post on the RNN modes that cuDNN now supports, including ReLU, gated recurrent unit (GRU), and LSTM. cuDNN has been integrated into all of the most popular deep learning frameworks, including Caffe, Torch, CNTK, TensorFlow, and others.

Using Torch compiled with cuDNN bindings, recently available networks such as LSTM enable capabilities in areas such as deep reinforcement learning, where an artificial agent learns to operate online in a real-world or virtual environment based on sensor state and feedback from a reward function. By letting deep reinforcement learners explore their environment and adapt to changing conditions, AI agents develop an understanding of complex predictive and intuitive human-like behaviors. The OpenAI Gym project has many examples of virtual environments used to train AI agents.
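The reward-driven learning loop described above can be sketched on a toy problem. The following is a minimal tabular Q-learning example, assuming a hypothetical five-cell corridor environment in which the agent is rewarded for reaching the rightmost cell. The environment, function names, and hyperparameters are all illustrative. This is not OpenAI Gym, and it uses a lookup table rather than a deep network.

```python
import random

N_STATES = 5        # corridor cells 0..4; reaching cell 4 ends the episode
ACTIONS = (-1, 1)   # index 0 moves left, index 1 moves right


def step(state, action):
    """Toy environment: move along the corridor, reward 1.0 at the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def train(episodes=300, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn a table q[state][action_index] from reward feedback alone."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))       # explore
            else:
                best = max(q[state])                  # exploit, random tie-break
                a = rng.choice([i for i, v in enumerate(q[state]) if v == best])
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning target: immediate reward plus discounted best future value
            future = 0.0 if done else gamma * max(q[next_state])
            q[state][a] += alpha * (reward + future - q[state][a])
            state = next_state
    return q


def greedy_policy(q):
    """Best action for each non-terminal cell according to the table."""
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda i: q[s][i])]
            for s in range(N_STATES - 1)]


q_table = train()
print(greedy_policy(q_table))  # the learned policy moves right in every cell
```

A deep Q-network replaces the table `q` with a neural network that maps high-dimensional sensor input to one value per action, which is where GPU acceleration becomes important.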
In environments with very complex state spaces (such as those found in many real-world scenarios), reinforcement learners use deep neural networks to choose the next action by estimating the potential future reward based on sensory input (commonly known as Q-learning and deep Q-learning networks, or DQNs). Because DQNs are typically very large, in order to map sensor state (such as high-resolution camera and LIDAR data) to an output for each potential action the agent can perform, cuDNN is critical for accelerating the reinforcement learning network so that the AI agent remains interactive and can learn in real time. Figure 5 shows the output of a DQN I wrote for real-time learning on the Jetson TX1. The code for this example is available on GitHub; it is implemented using cuDNN bindings in Torch and has a C++ library API for integration into robotics platforms such as the Robot Operating System (ROS).

In many real-world robotic applications and sensor configurations, a fully observable state space may not be available, so the network cannot maintain instantaneous sensory access to the entire state of the environment. The GPU-accelerated LSTM from cuDNN is particularly effective at addressing this partial observability problem, relying on memory encoded in the LSTM to remember previous experiences and chain observations together. LSTMs are also useful in natural language processing (NLP) applications with grammatical structure.

JetPack 2.3 also includes the new Jetson Multimedia API package, which gives developers low-level API access for flexible application development using the Tegra X1 hardware codecs, MIPI CSI video ingest (VI), and image signal processor (ISP). This is in addition to the GStreamer media framework provided in previous releases. The Jetson Multimedia API includes camera capture and ISP control, and Video4Linux2 (V4L2) for encoding, decoding, scaling, and more. These lower-level APIs provide finer control over the underlying hardware blocks.
V4L2 support provides access to the video encoding and decoding devices, format conversion, and scaling functionality, including support for EGL and efficient memory streaming. V4L2 for encoding exposes many features, such as bit rate control, quality presets, low-latency encoding, temporal tradeoff, and motion vector maps, for flexible and rich application development. Decoder capabilities are significantly enhanced by the addition of robust error and information reporting, frame-skip support, EGL image output, and more. V4L2 exposes the Jetson TX1's powerful video hardware capabilities for image format conversion, scaling, cropping, rotation, filtering, and multiple simultaneous stream encodes.

To help developers quickly integrate deep learning applications with data streaming sources, the Jetson Multimedia API includes powerful examples of using the V4L2 codecs with TensorRT. The Multimedia API package contains the object detection network example shown in Figure 6, which is derived from GoogleNet and streams pre-encoded H.264 video data through the V4L2 decoder and TensorRT.

Compared with core image recognition, object detection provides bounding locations within the image in addition to classification, making it useful for tracking and obstacle avoidance. The Multimedia API sample network is derived from GoogleNet, with additional layers for extracting bounding boxes. At 960×540 half-HD input resolution, the object detection network captures higher resolution than the original GoogleNet while retaining real-time performance on the Jetson TX1 using TensorRT.

Other features in the Jetson Multimedia API package include ROI encoding, which allows up to 6 regions of interest to be defined in a frame. This enables transmission and storage bandwidth optimization by allocating higher bit rates only to the regions of interest.
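The ROI constraint described above can be illustrated with a short sketch. The `Roi` record, the `qp_delta` field, and the `validate_rois` helper are hypothetical names introduced only for this example; they are not the Jetson Multimedia API. The sketch simply checks that a frame carries at most six regions and that each one lies inside the frame.

```python
from dataclasses import dataclass
from typing import List, Sequence

MAX_ROI_REGIONS = 6  # per-frame limit stated in the text


@dataclass(frozen=True)
class Roi:
    """Hypothetical region-of-interest record (not the real API type)."""
    left: int
    top: int
    width: int
    height: int
    qp_delta: int  # assumed convention: negative means spend more bits here


def validate_rois(rois: Sequence[Roi], frame_width: int, frame_height: int) -> List[Roi]:
    """Return the regions as a list, or raise ValueError if any rule is broken."""
    if len(rois) > MAX_ROI_REGIONS:
        raise ValueError(f"at most {MAX_ROI_REGIONS} regions per frame, got {len(rois)}")
    for roi in rois:
        if roi.width <= 0 or roi.height <= 0:
            raise ValueError(f"region has an empty area: {roi}")
        inside = (roi.left >= 0 and roi.top >= 0
                  and roi.left + roi.width <= frame_width
                  and roi.top + roi.height <= frame_height)
        if not inside:
            raise ValueError(f"region lies outside the frame: {roi}")
    return list(rois)


# Example: two regions in a 1920x1080 frame (illustrative values only)
regions = validate_rois(
    [Roi(100, 100, 320, 240, qp_delta=-5), Roi(800, 400, 640, 360, qp_delta=-3)],
    frame_width=1920,
    frame_height=1080,
)
print(len(regions), "regions accepted")
```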
To further facilitate efficient streaming between CUDA and OpenGL-like APIs and EGLStreams, the NV dma_buf structure is exposed in the Multimedia API.

Based on Khronos OpenKCam, the low-level camera/ISP API libargus provides fine-grained per-frame control over camera parameters and EGL stream outputs for efficient interoperation with GStreamer and V4L2 pipelines. The Camera API gives developers low-level access to MIPI CSI camera video ingest and ISP engine configuration. Sample C++ code and an API reference are also included. The sample code shows how to search for an available camera, initialize the camera stream, and capture a video frame.

The Jetson TX1 Development Kit includes a 5MP camera module with the OmniVision OV5693 RAW image sensor. ISP support for this module is now enabled through the Camera API or the GStreamer plugin. The 2.1MP IMX185 camera from Leopard Imaging is also fully supported through the camera/ISP API (see Leopard Imaging's Jetson Camera Kit). ISP support for additional sensors is available through preferred partner services. In addition, USB cameras, CSI cameras with integrated ISPs, and RAW-output CSI cameras in ISP-bypass mode can be used with the V4L2 API.

Going forward, all camera device drivers should use the V4L2 media controller sensor kernel driver API; see the V4L2 Sensor Driver Programming Guide for details and a complete example based on the Developer Kit camera module.

Smart machines are everywhere

JetPack 2.3 includes all the latest tools and components for deploying production-grade, high-performance embedded systems using the NVIDIA Jetson TX1 and GPU technology. NVIDIA GPUs power the latest breakthroughs in deep learning and artificial intelligence, which are being used to solve important everyday challenges. Using GPUs and the tools in JetPack 2.3, anyone can start designing advanced AI to solve practical problems.
Visit NVIDIA's Deep Learning Institute for hands-on training courses, and see these Jetson wiki resources for deep learning. NVIDIA's Jetson TX1 DevTalk forum is also available for technical support and discussion with community developers. Download JetPack today and install the latest NVIDIA tools for Jetson and PC.

Figure 1: Capable of flying at 85 mph, the lightweight Teal drone features the NVIDIA Jetson TX1 and instant deep learning.

Figure 2: When running GoogleNet on the Jetson TX1 in FP16 mode with batch size 2, TensorRT more than doubles Caffe's performance.

Figure 3: The Jetson TX1 is up to 20 times more efficient than a CPU at deep learning inference.

Figure 4: DIGITS workflow for training networks on a discrete GPU and deploying with TensorRT on the Jetson TX1.

Figure 5: A deep Q-learning network (DQN) learns while playing games and simulations on the Jetson TX1.

Figure 6: The modified GoogleNet network that ships with the Jetson Multimedia SDK detects bounding boxes of cars in full-motion video.